Defending Against AI-Powered Deepfakes

As AI keeps improving, it’s becoming difficult for humans to reliably spot deepfakes. This poses a serious problem for any form of authentication that relies on images of the trusted individual. However, some approaches to countering the deepfake threat show promise.

A deepfake, a portmanteau of “deep learning” and “fake,” can be any photograph, video, or audio recording that has been manipulated to deceive. The origins of deepfakes can be traced back to 1997, when a project called Video Rewrite demonstrated that it was possible to reanimate video of someone’s face so that the person appeared to say words they never said.

Early deepfakes required considerable technical sophistication on the part of the user, but that’s no longer true in 2025. Thanks to generative AI techniques such as diffusion models and generative adversarial networks (GANs), both of which can synthesize highly realistic images, anyone can now create a deepfake using open source tools.

The ready availability of sophisticated deepfake tools has serious repercussions for privacy and security. Society suffers when deepfake technology is used to create fake news, hoaxes, child sexual abuse material, and revenge porn. Several bills that would criminalize this use of the technology have been proposed in the U.S. Congress and in several state legislatures.

The impact on the financial world is also significant, in large part because we rely so heavily on authentication for critical services, like opening a bank account or withdrawing money. Biometric authentication mechanisms, such as facial recognition, can provide greater assurance than passwords or conventional multi-factor authentication (MFA) approaches. But any authentication mechanism that relies, even in part, on images or video to prove an individual’s identity is vulnerable to being spoofed with a deepfake.

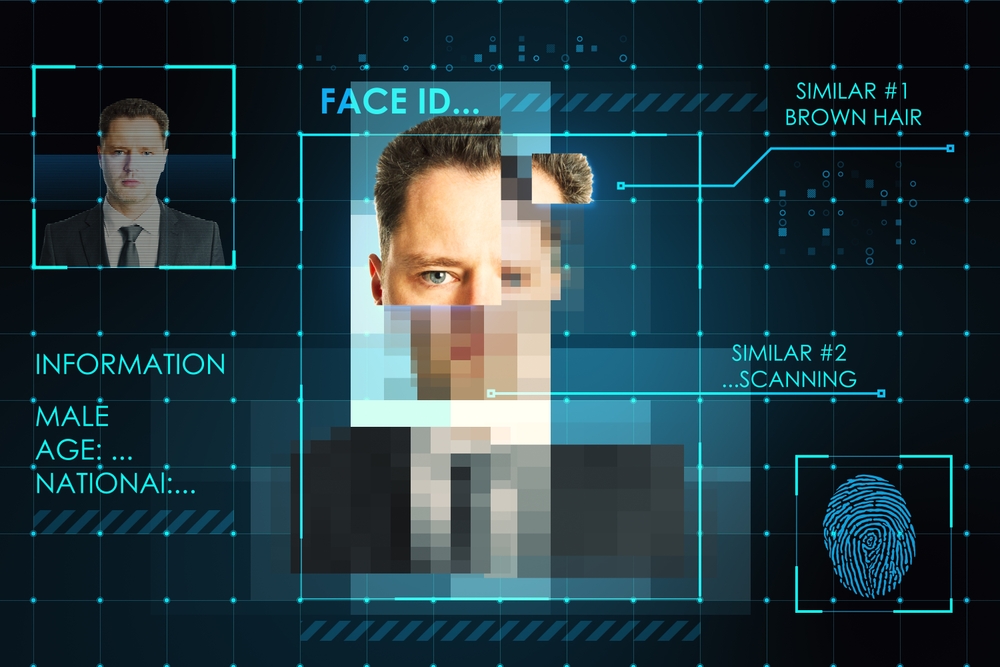

The deepfake image (left) was created from the original (right) and briefly fooled KnowBe4. (Image source: KnowBe4)

Fraudsters, ever the opportunists, have readily picked up deepfake tools. A recent study by Signicat found that deepfakes were used in 6.5% of fraud attempts in 2024, up from less than 1% of attempts in 2021, an increase of more than 2,100%. Over the same period, fraud overall rose 80% and identity fraud rose 74%, the study found.
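As a quick consistency check using only the two Signicat figures quoted above, a relative increase $r$ from a 2021 share $p_{2021}$ to a 2024 share $p_{2024} = 6.5\%$ of more than 2,100% bounds the 2021 share well below the stated 1% ceiling:

```latex
\[
r = \frac{p_{2024} - p_{2021}}{p_{2021}} \times 100\%,
\qquad
r > 2100\% \;\Longrightarrow\; \frac{p_{2024}}{p_{2021}} > 22
\;\Longrightarrow\; p_{2021} < \frac{6.5\%}{22} \approx 0.30\%.
\]
```

In other words, the two numbers together imply that deepfakes accounted for only about three in every thousand fraud attempts in 2021.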

“AI is about to enable more sophisticated fraud, at a greater scale than ever seen before,” Consult Hyperion CEO Steve Pannifer and Global Ambassador David Birch wrote in the Signicat report, titled “The Battle Against AI-driven Identity Fraud.” “Fraud is likely to be more successful, but even if success rates stay steady, the sheer volume of attempts means that fraud levels are set to explode.”

The threat posed by deepfakes is not theoretical: fraudsters are already going after large financial institutions. The Financial Services Information Sharing and Analysis Center (FS-ISAC) cataloged numerous scams in a 185-page report.

For instance, a fake image of an explosion at the Pentagon in May 2023 caused the Dow Jones Industrial Average to fall 85 points in four minutes. There is also the fascinating case of a North Korean IT worker who created fake identification documents and, in July 2024, fooled KnowBe4 (the security awareness firm co-founded by the hacker Kevin Mitnick, who died in 2023) into hiring them. “If it can happen to us, it can happen to almost anyone,” KnowBe4 wrote in its blog post. “Don’t let it happen to you.”

Arguably the most famous deepfake incident came to light in February 2024, when fraudsters staged a fake video call with a finance clerk at a large Hong Kong company to discuss a transfer of funds. The deepfake video was so convincing that the clerk wired them $25 million.

There are hundreds of deepfake attacks every day, says Andrew Newell, the chief scientific officer at iProov. “The threat actors out there, the rate at which they adopt the various tools, is extremely rapid indeed,” Newell said.

The big shift that iProov has seen over the past two years is in the sophistication of deepfake attacks. Previously, deepfake attacks “required quite a high level of expertise to launch, which meant that some people could do them but they were fairly rare,” Newell told BigDATAwire. “There’s a whole new class of tools which make the job incredibly easy. You can be up and running in an hour.”

iProov develops biometric authentication software designed to counter increasingly effective deepfakes in remote online environments. For the highest-risk users and environments, iProov uses its proprietary Flashmark technology during sign-in. By flashing a sequence of different colored lights from the user’s device onto their face, iProov can determine the “liveness” of the individual, detecting whether the face is real or a deepfake such as a face swap.

It’s all about putting roadblocks in front of would-be deepfake fraudsters, Newell says.

“What you’re trying to do is to make sure you have a signal that is as complex as you possibly can, whilst making the task of the end user as simple as you possibly can,” he says. “The way that light bounces off a face it’s highly complex. And because the sequence of colors actually changes every time, it means if you try and fake it, that you have to fake it almost in actual real time.”
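iProov has not published how Flashmark works internally, so the following is only a generic challenge-response sketch of the idea Newell describes: the server draws an unpredictable color sequence for each sign-in, and accepts the response only if the light reflected off the face follows that sequence within a short deadline. Everything specific here is a hypothetical assumption, including the four-color palette, the eight-flash length, the 5-second deadline, the 90% match threshold, and the premise that the client reports one mean RGB change (lit frame minus unlit baseline) per flash. A real system would analyze raw video frames with far richer models.

```python
import math
import secrets
import time
from dataclasses import dataclass

# Hypothetical palette of screen colors the client may flash (RGB directions).
PALETTE = {
    "red": (1.0, 0.0, 0.0),
    "green": (0.0, 1.0, 0.0),
    "blue": (0.0, 0.0, 1.0),
    "white": (1.0, 1.0, 1.0),
}


@dataclass
class Challenge:
    colors: list        # secret per-session flash sequence
    issued_at: float    # monotonic time the challenge was issued
    deadline_s: float   # seconds the client has to respond


def issue_challenge(length=8, deadline_s=5.0):
    """Draw a fresh, cryptographically random color sequence for one attempt."""
    names = list(PALETTE)
    colors = [secrets.choice(names) for _ in range(length)]
    return Challenge(colors, time.monotonic(), deadline_s)


def classify_shift(shift):
    """Map a face's mean RGB change to the palette color whose direction it
    most resembles, using cosine similarity."""
    def cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0
    return max(PALETTE, key=lambda name: cosine(shift, PALETTE[name]))


def verify_response(challenge, shifts, received_at, min_match=0.9):
    """Accept only if reflections arrive in time and track the sequence."""
    if received_at - challenge.issued_at > challenge.deadline_s:
        return False  # too slow: a long delay could allow rendering a matching fake
    if len(shifts) != len(challenge.colors):
        return False  # wrong number of flashes observed
    observed = [classify_shift(s) for s in shifts]
    matches = sum(o == e for o, e in zip(observed, challenge.colors))
    return matches / len(challenge.colors) >= min_match
```

Because the sequence is drawn fresh for every attempt, a pre-recorded or pre-rendered video matches it only by chance, which is what pushes an attacker toward faking the reflections in near real time.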

The authentication company AuthID uses a variety of techniques to detect the liveness of individuals during the authentication process to defeat deepfake presentation attacks.

“We start with passive liveness detection, to determine that the id as well as the person in front of the camera are in fact present, in real time. We detect printouts, screen replays, and videos,” the company writes in its white paper, “Deepfakes Counter-Measures 2025.” “Most importantly, our market-leading technology examines both the visible and invisible artifacts present in deepfakes.”

Defeating injection attacks, in which the camera is bypassed and fake images are fed directly into the authentication pipeline, is tougher. AuthID uses multiple techniques, including checking the integrity of the device, analyzing images for signs of fabrication, looking for anomalous activity, and validating images when they arrive at the server.

“If [the image] shows up without the right credentials, so to speak, it’s not valid,” the company writes in the white paper. “This means coordination of a kind between the front end and the back. The server side needs to know what the front end is sending, with a type of signature. In this way, the final payload comes with a star of approval, indicating its legitimate provenance.”
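The white paper does not say how AuthID implements this signature, so the following is a hypothetical sketch of the general idea using a keyed hash (HMAC-SHA256) from Python’s standard library: the client binds each captured image to a server-issued nonce and a timestamp, and the server rejects any image whose “credentials” don’t check out. The shared per-device key, how it is provisioned, the 30-second freshness window, and the envelope field names are all assumptions for illustration.

```python
import hashlib
import hmac
import secrets
import time

# Hypothetical per-device key, assumed to be provisioned to a trusted client
# and known to the server. Not any vendor's actual scheme.
DEVICE_KEY = secrets.token_bytes(32)
MAX_AGE_S = 30  # assumed freshness window in seconds


def _message(digest, nonce, ts):
    # Canonical byte string covered by the signature.
    return f"{digest}|{nonce}|{ts}".encode()


def sign_capture(image_bytes, nonce, key=DEVICE_KEY, now=None):
    """Client side: bind a captured image to the server's nonce and a timestamp."""
    ts = int(time.time() if now is None else now)
    digest = hashlib.sha256(image_bytes).hexdigest()
    tag = hmac.new(key, _message(digest, nonce, ts), hashlib.sha256).hexdigest()
    return {"image_sha256": digest, "nonce": nonce, "ts": ts, "tag": tag}


def verify_capture(image_bytes, envelope, expected_nonce, key=DEVICE_KEY, now=None):
    """Server side: accept the image only if its envelope checks out."""
    now = time.time() if now is None else now
    if envelope["nonce"] != expected_nonce:
        return False  # replayed or unsolicited submission
    if abs(now - envelope["ts"]) > MAX_AGE_S:
        return False  # stale capture
    digest = hashlib.sha256(image_bytes).hexdigest()
    if not hmac.compare_digest(digest, envelope["image_sha256"]):
        return False  # image swapped after signing
    expected = hmac.new(key, _message(digest, envelope["nonce"], envelope["ts"]),
                        hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, envelope["tag"])  # constant-time compare


# Usage: the server issues a nonce, the client signs, the server verifies.
nonce = secrets.token_hex(16)
envelope = sign_capture(b"camera frame bytes", nonce)
print(verify_capture(b"camera frame bytes", envelope, nonce))
```

The nonce stops an old, legitimately signed capture from being replayed, and the tag stops an injected image from being substituted after signing. The scheme is only as strong as the key’s protection: an attacker who fully controls the device could extract the key and sign a fake, which is why device-integrity checks matter alongside it. Production systems more commonly use asymmetric signatures from hardware-backed keys, so the server never holds a device’s signing secret.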

The AI technology behind deepfake attacks will likely keep improving. That puts pressure on companies to fortify their authentication processes now or risk letting the wrong people into their operations.


The post Defending Against AI-Powered Deepfakes appeared first on BigDATAwire.