South Korea’s AI Framework May Challenge Startups Ahead of 2026 Implementation

The post South Korea’s AI Framework May Challenge Startups Ahead of 2026 Implementation appeared on BitcoinEthereumNews.com.

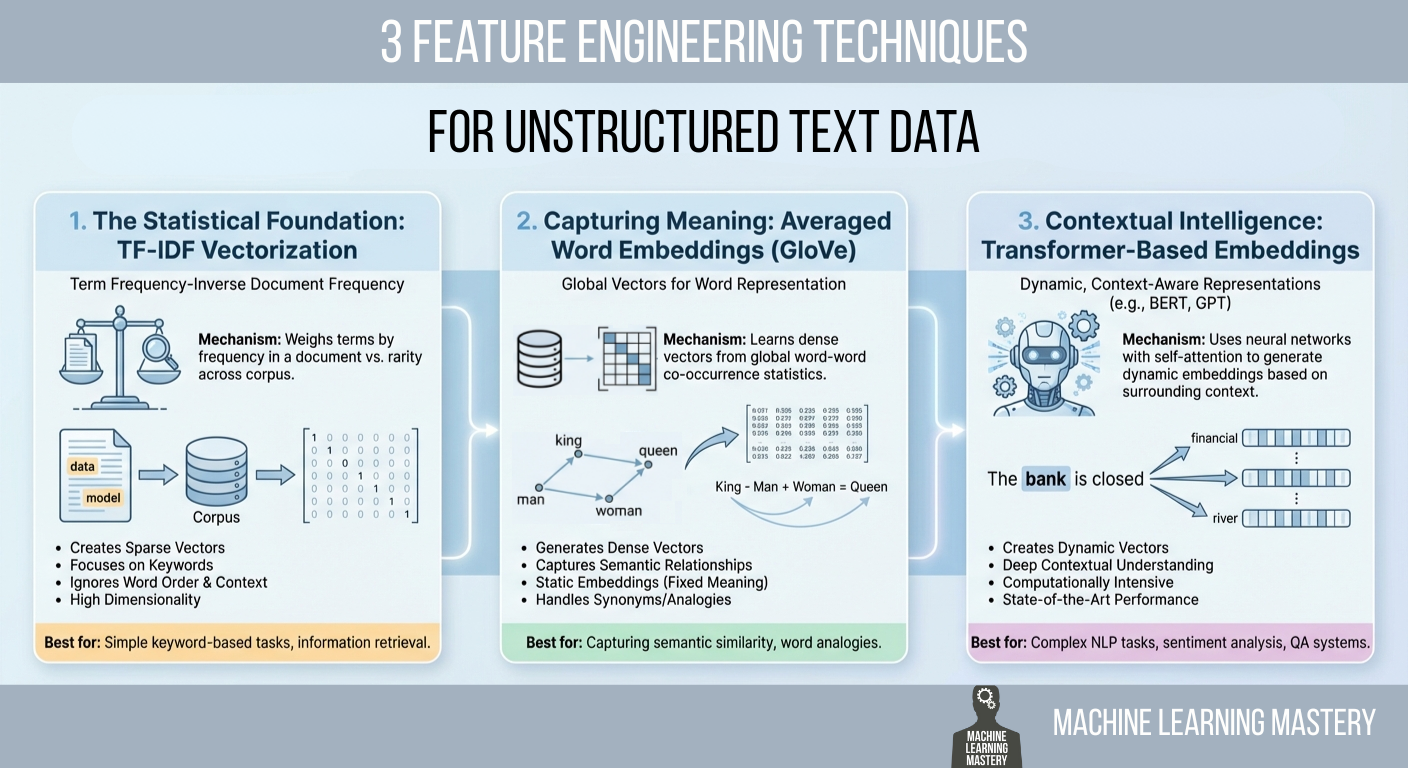

South Korea’s new AI Framework Act, effective January 22, 2026, aims to balance innovation with ethical oversight amid industry concerns. The act will create a national AI committee to oversee AI development and implementation, and it mandates a basic three-year AI plan to guide national strategy in artificial intelligence. Its safety measures include transparency disclosure obligations for AI systems, yet 98% of AI firms surveyed by the Startup Alliance report that they are unprepared.

What is South Korea’s AI Framework Act?

South Korea’s AI Framework Act is a comprehensive law designed to regulate the artificial intelligence sector by promoting safe and ethical development. Set to take effect on January 22, 2026, it seeks to address business concerns that regulation could stifle innovation while still establishing essential guardrails. The legislation is intended to create a structured environment for AI advancement without overburdening smaller firms.

How will the new AI regulations affect South Korean startups?

South Korea’s upcoming AI regulations are sparking unease among startups. A Startup Alliance survey of 101 local AI companies found that 98% lack a compliance system. Of these, 48.5% admitted they were unfamiliar with the law, while another 48.5% said they felt inadequately prepared despite some efforts. Industry officials warn that the tight timeline could force service disruptions or suspensions after January 22, potentially driving firms to launch abroad in markets with less stringent rules, such as Japan. Key requirements such as mandatory watermarking of AI-generated content are meant to combat deepfakes but raise practical problems.
An official from an AI content company noted that labeling could deter consumers, even for high-quality outputs involving extensive human input. The lack of clear guidelines, without input…