Why Does Building AI Feel Like Assembling IKEA Furniture?

Like most new IT paradigms, AI is a roll-your-own adventure. While LLMs might be trained by others, early adopters are predominantly building their own applications out of component parts. In the hands of skilled developers, this process can lead to competitive advantage. But when it comes to connecting tools and accessing data, some argue that there has to be a better way.

Dave Eyler, the vice president of product management at database maker SingleStore, has some thoughts on the data side of the AI equation. Here is a recent Q&A with Eyler:

BigDATAwire: Is the interoperability of AI tools a challenge for you or for others?

Dave Eyler: It’s really a challenge for both: you need interoperability to make your own systems run smoothly, and you need it again when those systems have to connect with tools or partners outside your walls. AI tools are advancing quickly, but they’re often built in silos. Integrating them into existing data systems or combining tools from different vendors is critical, but can feel like assembling furniture without instructions. Technically possible, but messy and more time-consuming than necessary. That’s why we see modern databases becoming the connective tissue that makes these tools work together more seamlessly.

BDW: What interoperability challenges exist? If there’s a problem, what is the biggest issue?

DE: The biggest issue is data fragmentation; AI thrives on context, and when data lives across different clouds, formats, or vendors, you lose that context. Have you ever tried communicating with someone who speaks a different language? No matter how well each of you speaks your own language, the two aren’t compatible, and communication is clunky at best. Compatibility between tools is improving, but standardization is still lacking, especially when you’re dealing with real-time data.

BDW: What’s the potential danger of interoperability issues? What problems does a lack of interoperability cause?

DE: The risk is twofold: missed opportunities and bad decisions. If your AI tools can’t access all the right data, you might get biased or incomplete insights. Worse, if systems aren’t talking to each other, you lose precious time connecting the dots manually. And in real-time analytics, speed is everything. We’ve seen customers solve this by centralizing workloads on a unified platform like SingleStore that supports both transactions and analytics natively.

BDW: How are companies addressing these challenges today, and what lessons can others take?

DE: Many companies are tackling interoperability by investing in more modern data architectures that can handle diverse data types and workloads in one place. Rather than stitching together a patchwork of tools, they’re unifying data pipelines, storage, and compute to reduce those lags and communication stumbles that have historically been an issue for developers. They’re also prioritizing open standards and APIs to ensure flexibility as the AI ecosystem evolves. The earlier you build on a platform that eliminates silos, the faster you can experiment and scale AI initiatives without hitting integration roadblocks.

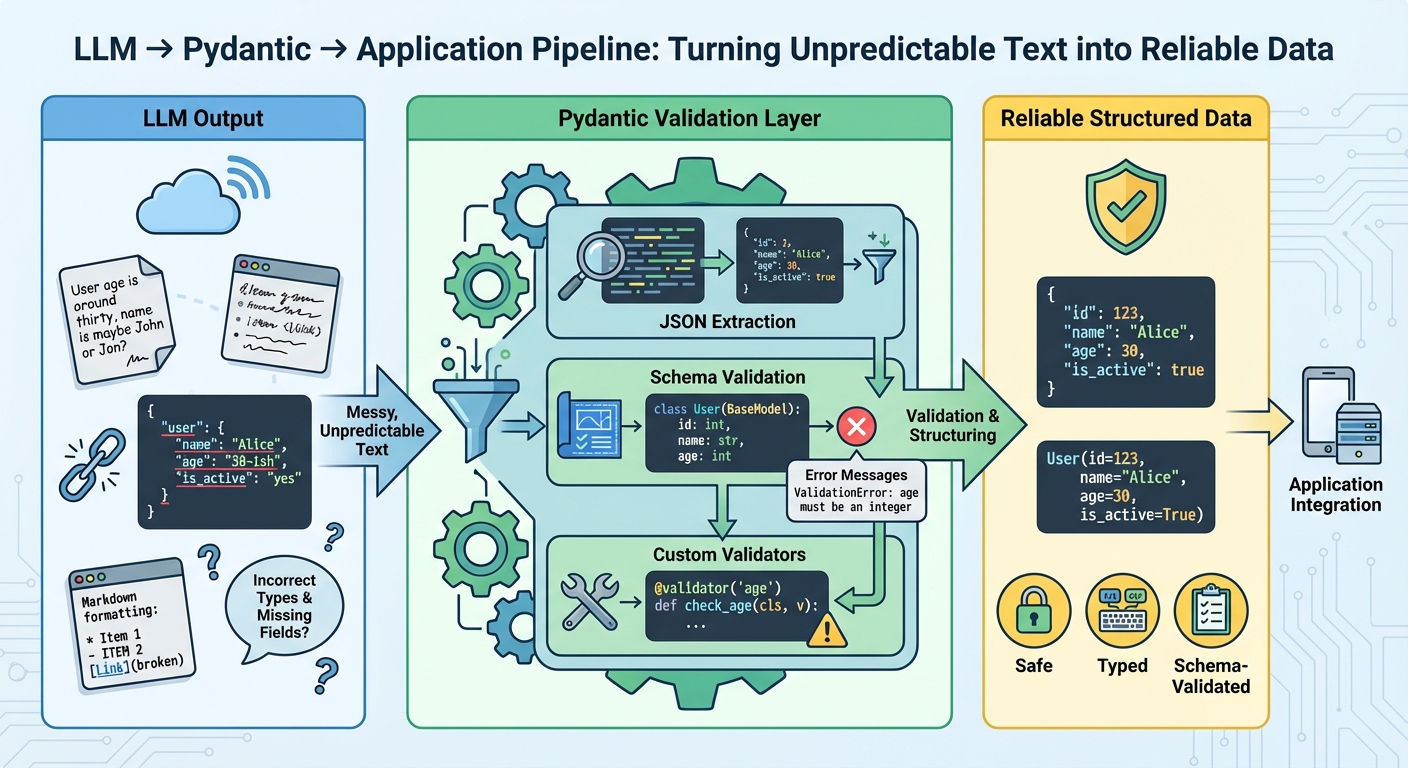

Interoperability is also the main reason SingleStore released its MCP Server. Model Context Protocol (MCP) is an open standard that lets AI agents securely discover and interact with live tools and data. MCP servers expose structured “tools” (e.g., SQL execution, metadata queries) that allow LLMs like Claude, ChatGPT or Gemini to query databases, call APIs or even trigger jobs, going beyond static training data. This is a big step in making SingleStore more interoperable with the AI ecosystem, and one that others in the industry are also adopting.
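To make the idea of discoverable "tools" concrete, here is a minimal Python sketch of the pattern, not SingleStore's MCP Server and not the official MCP SDK. It borrows the `tools/list` and `tools/call` method names and the JSON-RPC 2.0 envelope that MCP uses, but simplifies the result payloads. The `orders` table, the `run_sql` and `list_tables` tools, and the `handle` dispatcher are all hypothetical names invented for illustration, and an in-memory SQLite database stands in for a real one.

```python
import sqlite3

# In-memory SQLite stands in for a real database; the table is invented for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders (region, amount) VALUES (?, ?)",
    [("emea", 120.0), ("apac", 80.0), ("emea", 30.0)],
)


def run_sql(query: str) -> list:
    """Hypothetical 'SQL execution' tool, restricted to SELECT statements."""
    if not query.lstrip().lower().startswith("select"):
        raise ValueError("only SELECT statements are allowed in this sketch")
    return [list(row) for row in conn.execute(query).fetchall()]


def list_tables() -> list:
    """Hypothetical 'metadata query' tool: names of the tables in the database."""
    rows = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    )
    return [name for (name,) in rows]


# Registry of tools the server advertises to a client (assumed shape, simplified).
TOOLS = {
    "run_sql": {"fn": run_sql, "description": "Run a read-only SQL query"},
    "list_tables": {"fn": list_tables, "description": "List table names"},
}


def handle(request: dict) -> dict:
    """Dispatch one JSON-RPC-style request to tool discovery or a tool call."""
    method = request["method"]
    params = request.get("params", {})
    if method == "tools/list":
        result = {
            "tools": [
                {"name": name, "description": tool["description"]}
                for name, tool in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        tool = TOOLS[params["name"]]
        result = {"content": tool["fn"](**params.get("arguments", {}))}
    else:
        # -32601 is the JSON-RPC 2.0 code for "Method not found".
        return {
            "jsonrpc": "2.0",
            "id": request.get("id"),
            "error": {"code": -32601, "message": "Method not found"},
        }
    return {"jsonrpc": "2.0", "id": request.get("id"), "result": result}
```

A client would first send `{"method": "tools/list"}` to learn what the server offers, then send `{"method": "tools/call", "params": {"name": "run_sql", "arguments": {"query": "SELECT ..."}}}` to get live rows back. The model never needs database-specific integration code; it only needs to speak the shared protocol, which is the interoperability point being made here.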

BDW: Where do you see interoperability evolving over the next one to two years, and how should enterprises prepare?

DE: In the near term, we expect interoperability to become less about point-to-point integrations and more about database ecosystems that are inherently connected. Vendors are under pressure to make their AI tools “play well with others,” and customers will increasingly favor platforms that deliver broad out-of-the-box compatibility. Businesses should prepare by auditing their current data landscape, identifying where silos exist, and consolidating where possible. At the same time, the pace of AI innovation is creating unprecedented demand for high-quality, diverse data, and there simply isn’t enough readily available to train all the models being built. Businesses that move early will be positioned to take advantage of AI’s rapid evolution, while others may find themselves stuck solving yesterday’s plumbing problems.

The post Why Does Building AI Feel Like Assembling IKEA Furniture? appeared first on BigDATAwire.