Can You Afford to Run Agentic AI in the Cloud?

The emergence of agentic AI is putting fresh pressure on the infrastructure layer. If Nvidia CEO Jensen Huang is correct in his assumptions, demand for accelerated compute will increase by 100x as enterprises deploy AI agents based on reasoning models. Where will customers get the necessary GPUs and servers to run these inference workloads? The cloud is one obvious place, but some warn it could be too expensive.

When ChatGPT landed on the scene in late 2022 and early 2023, there was a Gold Rush mentality, and companies opened up the purse strings to explore different approaches. Much of that exploration was done in the cloud, where the costs for sporadic workloads can be lower. But now, as companies zero in on what sort of AI they want to run on a long-term basis, which in many cases will be agentic AI, the cloud doesn’t look like as good an option.

One of the companies helping enterprises move AI from proof of concept to deployed reality is H2O.ai, a San Francisco-based provider of predictive and generative AI solutions. According to H2O’s founder and CEO, Sri Ambati, its partnership with Dell to deploy on-prem AI factories at customer sites is gaining steam.

“People threw a lot of things in the exploratory phase, and there was unlimited budgets a couple of years ago to do that,” Ambati told BigDATAwire in an interview at GTC 2025 in San Jose this week. “People just starting have that mindset of unlimited budget. But when they go from demos to production, from pilots to production…it’s a long journey.”

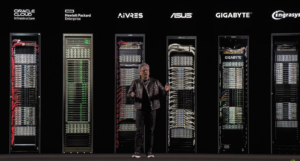

These 16 OEMs are currently shipping systems with Nvidia’s latest Blackwell GPUs, as Huang demonstrated during his keynote at GTC 2025 this week.

In many cases, that journey involves analyzing the cost of turning words into tokens that are processed by the large language models (LLMs), and then turning the output back into words that are presented to the user. The emergence of reasoning models changes the token math, in large part because there are many more steps in the chain-of-thought reasoning done with reasoning models, which necessitates many more tokens. Ambati said he doesn’t believe that it’s a 100x difference, as the reasoning models will be more efficient than Huang claimed. But efficiency demands better bang-for-your-buck, and for many that means moving on-prem, he said.

“On-prem GPUs are about a third of the cost of cloud GPUs,” Ambati said. “I think the efficient AI frontier has arrived.”
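The token math described above can be sketched in a few lines of Python. This is only an illustration, not H2O.ai's or Nvidia's model: the token counts and the per-million-token price are hypothetical placeholders, chosen to show how chain-of-thought tokens inflate the cost of a single request.

```python
# Hypothetical figures throughout; none of these numbers come from the article.

def tokens_per_request(prompt_tokens: int, reasoning_tokens: int, answer_tokens: int) -> int:
    """Total tokens the model reads or generates for one request."""
    return prompt_tokens + reasoning_tokens + answer_tokens


def cost_per_request(total_tokens: int, price_per_million_tokens: float) -> float:
    """Cost of one request at a flat (assumed) per-token price."""
    return total_tokens / 1_000_000 * price_per_million_tokens


# A conventional model answers directly; a reasoning model first emits
# chain-of-thought tokens, which are billed or computed like any others.
plain_tokens = tokens_per_request(prompt_tokens=500, reasoning_tokens=0, answer_tokens=500)
reasoning_tokens = tokens_per_request(prompt_tokens=500, reasoning_tokens=9_000, answer_tokens=500)

token_multiplier = reasoning_tokens / plain_tokens

PRICE_PER_MILLION = 2.0  # hypothetical USD price per million tokens
print(f"Token multiplier: {token_multiplier:.1f}x")
print(f"Plain request cost: ${cost_per_request(plain_tokens, PRICE_PER_MILLION):.4f}")
print(f"Reasoning request cost: ${cost_per_request(reasoning_tokens, PRICE_PER_MILLION):.4f}")
```

With these assumed inputs the multiplier depends entirely on how many reasoning tokens a request consumes, which is why estimates of the overall increase can differ so widely.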

Online and On-Prem

Another company seeing a resurgence of on-prem processing in the agentic AI era is Cloudera. According to Priyank Patel, the company’s corporate VP of enterprise AI, some Cloudera customers already are starting down the road to adopting agentic AI and reasoning models, including Mastercard and OCBC Bank in Singapore.

“We see definitely a lot of our customers experimenting with agents and reasoning models,” he said. “The market is moving there, not just because infrastructure providers want to go there, but also because the value is being seen by the end users as well.”

However, if inference is going to drive a 100x increase in workload, as Nvidia’s Huang said during his GTC 2025 keynote, then it doesn’t make a lot of sense to run these workloads in the public cloud, Patel told BigDATAwire at the conference this week.

“It feels like for the last 10 years, the world has been going to the cloud,” he said. “Now they’re taking a second hard look.”

Because Cloudera designed its data platform as a hybrid cloud offering, customers can easily move workloads where they need to. Customers don’t need to retool their applications to run them somewhere else, Patel said. The fact that Cloudera customers are looking to run agentic AI applications on-prem indicates that the financials of running them in the cloud don’t make much sense, he said.

“The cost of ownership of doing training, tuning, even large-scale inferencing like the ones that Nvidia talks about for agentic AI in the future, is a much better TCO argument driven on prem, or driven with owned infrastructure within data centers, as opposed to just on rented by the hour instances on the cloud,” Patel said. “On the data center side, you’re paying for it once, and then you have it. Theoretically, you’re not essentially adding on to cost if you’re using more of it.”
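Patel's rent-versus-own argument comes down to a break-even calculation, sketched below in Python. Every input is a hypothetical placeholder rather than a figure from Cloudera or any vendor, and the model is deliberately simple: it assumes a one-time purchase price, a flat hourly operating cost for owned hardware (power, cooling, staff), and a flat hourly cloud rate.

```python
HOURS_PER_YEAR = 24 * 365  # an always-on workload runs 8,760 hours a year


def break_even_hours(purchase_cost: float, owned_hourly_cost: float, cloud_hourly_rate: float) -> float:
    """Hours of use after which owning becomes cheaper than renting."""
    saving_per_hour = cloud_hourly_rate - owned_hourly_cost
    if saving_per_hour <= 0:
        raise ValueError("Owning never pays off if it costs more per hour than renting.")
    return purchase_cost / saving_per_hour


def years_to_break_even(hours_needed: float, utilization: float) -> float:
    """Calendar years to accumulate the break-even hours at a given utilization (0-1]."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1].")
    return hours_needed / (HOURS_PER_YEAR * utilization)


# Hypothetical inputs: a $250,000 GPU server, $5/hour to operate, $30/hour to rent.
hours = break_even_hours(purchase_cost=250_000.0, owned_hourly_cost=5.0, cloud_hourly_rate=30.0)

for utilization in (1.0, 0.5, 0.1):
    years = years_to_break_even(hours, utilization)
    print(f"Utilization {utilization:.0%}: break-even after {years:.1f} years")
```

Under these assumptions, an always-on workload reaches break-even far sooner than a sporadic one, which is consistent with the article's point that exploration suits the cloud while persistent production workloads favor owned infrastructure.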

Get Thee to the FinOps-ery

The three big public clouds, AWS, Microsoft Azure, and Google Cloud, have seen tremendous growth over the years in the amount of data they’re storing and the amount of data they’re processing for customers. The growth has been so great that people have grown a bit complacent about trying to align the cost of the services with the value they get out of them.

According to Akash Tayal, the cloud engineering offering lead at Deloitte, the amount of money enterprises waste in the cloud generally ranges from 20% to 40%.

“There’s a lot of waste in the cloud,” Tayal told BigDATAwire. “It’s not that people haven’t thought of it. It’s that as you start getting into the cloud, you get new ideas, the technology evolves on you, there’s new services available.”

Customers who just lift-and-shift their existing applications into the cloud and don’t change how they consume resources are most likely to waste money, Tayal said. That’s also the easiest 10% of the total waste to recoup, he said. Eliminating the rest of the waste requires more careful monitoring and reengineering of applications, which is harder to do, he said. It’s also the focus of his FinOps practice at Deloitte, which has been growing strongly over the past few years.

Tayal defended the public clouds’ records when it comes to innovation. Those who are using the cloud to try out new technology and develop new applications are more likely to be getting better value out of the cloud, he said. Training or fine-tuning a model doesn’t require 24x7x365, always-on resources, so spinning up rented GPUs in the cloud could make sense.

Agentic AI is still a nascent technology, so there’s a lot of innovation occurring there that could be done in the cloud. But as that innovation turns into production use cases that need to be always on, enterprises need to take a hard look at what they’re running and how they’re running it. That’s where FinOps comes into play, Tayal said.

“If I actually use a workload that was persistent, taking an example of an ERP or something like that, and I started using these on demand services for it, running the meter 24 over seven for the whole month is not advisable,” Tayal said.

We’re still a long way from agentic AI becoming as necessary a workload as an ERP system (or as predictable a workload, for that matter). However, the costs of running agentic AI are potentially much larger than those of a well-established and efficient ERP system, which should force customers to analyze their costs and apply emerging FinOps principles much sooner.

Alternative Clouds

The truth is the public clouds have developed a reputation for overcharging customers. Sometimes those accusations are fair, but other times they are not. Regardless of whether the public clouds are intentionally gouging customers, dozens of alternative cloud companies have popped up that are more than happy to provide data storage and compute resources at a fraction of the cost of the big guys.

One of those alternative clouds is Vultr. The company was at GTC 2025 this week to let folks know that it has the latest, greatest GPUs from Nvidia, the HGX B200, ready to start doing AI.

“Basically it’s a play against the cloud giants. So it’s an alternative option,” said Kevin Cochrane, the company’s chief marketing officer. “We’re going to save you between 50% and 90% on the cost of cloud compute, and it’s going to free up enough capital to basically invest in your GPUs and your new AI initiative.”

Vultr isn’t trying to stand entirely between enterprises and AWS, for instance. But running exclusively on one cloud for all your workloads may not make financial sense, Cochrane said.

“You’re just trying to deploy a customer service agent. My God, are you really going to spend $10 million on AWS when you can do it on us for a fraction of the cost?” he said. “If we can get you up and running in half the time and half the cost, wouldn’t that be beneficial for you?”

Vultr was launched in 2014 by a group of engineers who wanted to offer solid infrastructure at a fair price. The company bought a bunch of servers, storage arrays, and networking gear, and installed them in colocation facilities around the country. Gradually the company expanded, and today it’s in hundreds of data centers around the world. It hired its first marketing person (Cochrane) three years ago, and recently passed the $100 million revenue mark. The company is “immensely profitable,” Cochrane said, and is entirely bootstrapped, meaning it hasn’t taken any venture or equity funding.

If Amazon is a luxury yacht with an abundance of amenities, then Vultr is a speedboat with a cooler full of drinks and sandwiches bouncing around the back. It’s going to get you where you need to go quickly, but without the costly comfort and padding.

“You’re funding a highly capital-intensive business. Every penny matters, right?” Cochrane said. “The reality is Amazon has all these wonderful services and all these wonderful services have very large product engineering teams and very large marketing budgets. They don’t make money. So how do you fund all this other stuff that does not actually make you money? It’s the cost of EC2, S3 and bandwidth are wildly inflated to cover the cost of everything else.”

While Vultr offers a range of compute services, it’s embracing its reputation as a cloud GPU specialist. As the agentic AI era dawns and drives up demand for compute, Vultr wants customers to rethink how they’re architecting their systems.

“Once you actually build something and then deploy it, you have to scale it across hundreds or thousands of different nodes, so the runtime demand always dwarfs the build demand 100% of the time by an order of magnitude or two,” Cochrane said. “We believe that we’re at the dawn of a new 10-year cycle of compute architecture that’s the union of CPUs and GPUs. It’s taking cloud-native engineering principles and now applying it to AI.”

The post Can You Afford to Run Agentic AI in the Cloud? appeared first on BigDATAwire.