FlashBlade//EXA Moves Data at 10+ TB/sec, Pure Storage Says

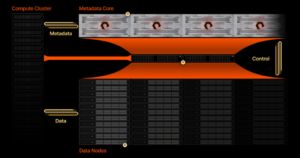

Pure Storage today unveiled FlashBlade//EXA, a new all-flash storage array designed for the demanding needs of AI factories and multimodal AI training. FlashBlade//EXA separates the metadata layer from the data path in the I/O stream, which Pure says lets the array move data at rates exceeding 10 terabytes per second per namespace.

FlashBlade//EXA expands Pure Storage’s existing FlashBlade lineup, which includes FlashBlade//S, its high-performance array for file and object workloads, and FlashBlade//E, its large-scale array for storing unstructured data.

The new array splits the high-speed I/O stream into two parts. Metadata is routed through FlashBlade//EXA’s metadata core, which is built on high-speed DirectFlash Module (DFM) nodes that house the company’s scale-out distributed key-value store. The metadata core nodes run the Purity//FB operating system, which has been bolstered with support for Parallel NFS (pNFS) to communicate with the compute nodes.

Block data is routed separately, over Remote Direct Memory Access (RDMA), to the data nodes. In this release, the data nodes are industry-standard Linux-based servers; the company plans to incorporate its DFM technology in a future release. Pure says this architecture lets FlashBlade//EXA use the maximum available bandwidth between the storage and the compute nodes.
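To make the metadata/data split concrete, here is a toy Python model. It is not Pure’s implementation, and every class and function name in it (`MetadataServer`, `DataNode`, `Layout`, `client_write`, `client_read`, `stripe_size`) is hypothetical. The idea it borrows is the pNFS one standardized in NFSv4.1: a client asks a metadata service for a layout that says which data nodes hold which stripes of a file, then moves the bytes directly to or from those nodes, so the metadata service never sits in the data path. RDMA, concurrency, caching, and failure handling are all left out.

```python
from dataclasses import dataclass, field


@dataclass
class DataNode:
    """Hypothetical data node: stores file stripes keyed by (path, stripe index)."""
    name: str
    stripes: dict = field(default_factory=dict)
    reads: int = 0

    def write(self, path: str, index: int, chunk: bytes) -> None:
        self.stripes[(path, index)] = chunk

    def read(self, path: str, index: int) -> bytes:
        self.reads += 1
        return self.stripes[(path, index)]


@dataclass
class Layout:
    """Which data node holds each stripe of a file (loosely like a pNFS layout)."""
    stripe_size: int
    nodes: list  # nodes[i] holds stripe i


class MetadataServer:
    """Hypothetical metadata service: answers 'where is the data?' but never moves file bytes."""

    def __init__(self, data_nodes: list, stripe_size: int):
        self.data_nodes = data_nodes
        self.stripe_size = stripe_size
        self.layouts: dict = {}
        self.requests = 0  # counts metadata round trips

    def create(self, path: str, size: int) -> Layout:
        self.requests += 1
        count = -(-size // self.stripe_size)  # ceiling division: number of stripes needed
        # Round-robin placement of stripes across the data nodes.
        nodes = [self.data_nodes[i % len(self.data_nodes)] for i in range(count)]
        self.layouts[path] = Layout(self.stripe_size, nodes)
        return self.layouts[path]

    def get_layout(self, path: str) -> Layout:
        self.requests += 1
        return self.layouts[path]


def client_write(mds: MetadataServer, path: str, data: bytes) -> None:
    # One metadata call to obtain a layout, then stripes go straight to the data nodes.
    layout = mds.create(path, len(data))
    for i, node in enumerate(layout.nodes):
        start = i * layout.stripe_size
        node.write(path, i, data[start:start + layout.stripe_size])


def client_read(mds: MetadataServer, path: str) -> bytes:
    # One metadata call, then each stripe is read directly from the node that holds it.
    layout = mds.get_layout(path)
    return b"".join(node.read(path, i) for i, node in enumerate(layout.nodes))


nodes = [DataNode(f"dn{i}") for i in range(4)]
mds = MetadataServer(nodes, stripe_size=4)
client_write(mds, "/train/shard-0", b"abcdefghijklmnop")
print(client_read(mds, "/train/shard-0"))
print(mds.requests, [n.reads for n in nodes])
```

In this sketch the metadata service handles one request per operation no matter how large the file is, while the data traffic fans out across all four data nodes. That separation is the property the article describes; how FlashBlade//EXA actually places data and serves layouts is not covered by the source.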

“This segregation provides non-blocking data access that increases exponentially in high-performance computing scenarios where the metadata requests can equal, if not outnumber, data I/O operations,” writes Alex Castro, a Pure Storage vice president, in a blog post.

FlashBlade//EXA separates the metadata stream from the block data stream, removing an I/O bottleneck, Pure Storage says

When Sun Microsystems created NFS back in 1984, functionality, not performance, was the primary focus, Castro says. Legacy NAS devices, which require more I/O controllers to be added with each new data node, have since become a performance bottleneck. Splitting the I/O is the key to removing that bottleneck, he says.

“Many storage vendors targeting the high-performance nature of large AI workloads only solve for half of the parallelism problem–offering the widest networking bandwidth possible for clients to get to data targets,” Castro writes. “They don’t address how metadata and data are serviced at massive throughput, which is where the bottlenecks at large scale emerge.”

Some storage vendors have turned to specialized parallel file systems, such as Lustre, to deliver the parallelism needed for large-scale projects, Castro writes, but these environments were prone to metadata latency and required PhD-level skills to manage. Other vendors, meanwhile, have inserted a compute aggregation layer between the compute clients and the data source.

“This model suffers from expansion rigidity and more management complexity challenges than pNFS when scaling for massive performance because it involves adding more moving parts with compute aggregation nodes,” Castro says. “This rigidity forces data and metadata to scale in lockstep, creating inefficiencies for multimodal and dynamic workloads.”

Pure says it developed the FlashBlade//EXA to meet the emerging needs of “AI factories,” and in particular the need to keep thousands of high-end GPUs fed with data.

In terms of scale, AI factories sit in the middle. At the low end are enterprise AI workloads, such as inference and retrieval-augmented generation (RAG), which work on 50TB to 100PB of data. AI factories will need access to up to 10,000 GPUs working on data sets from 100PB to multiple exabytes. At the high end, hyperscalers can have upwards of 100EB of data and more than 10,000 GPUs. At every tier, idle GPUs are a drag on productivity.
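A quick back-of-envelope calculation puts Pure’s headline figure in context against these tiers. The sketch below assumes decimal units, a sustained 10TB/sec for a single namespace, and bandwidth shared evenly across GPUs with no protocol overhead or contention. All of those are simplifying assumptions, not measured results, and the helper names are hypothetical.

```python
TB = 10**12  # decimal terabyte, as storage capacities and rates are usually quoted
PB = 10**15  # decimal petabyte


def per_gpu_bandwidth(aggregate_bytes_per_s: float, gpus: int) -> float:
    """Even share of aggregate bandwidth per GPU (ignores contention and overhead)."""
    return aggregate_bytes_per_s / gpus


def full_pass_seconds(dataset_bytes: float, aggregate_bytes_per_s: float) -> float:
    """Time to read a dataset once at a sustained aggregate rate."""
    return dataset_bytes / aggregate_bytes_per_s


rate = 10 * TB  # Pure's quoted per-namespace rate, assumed sustained
print(per_gpu_bandwidth(rate, 10_000) / 10**9, "GB/s per GPU across 10,000 GPUs")
print(round(full_pass_seconds(100 * PB, rate) / 3600, 2), "hours for one pass over 100PB")
```

Under these assumptions, spreading 10TB/sec across 10,000 GPUs leaves each one roughly 1GB/sec, and a single pass over a 100PB data set takes close to three hours. This helps explain why vendors focus on aggregate bandwidth at AI-factory scale.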

“Data is the fuel for enterprise AI factories, directly impacting performance and reliability of AI applications,” Rob Davis, Nvidia’s vice president of storage networking technology, said in a press release. “With Nvidia networking, the FlashBlade//EXA platform enables organizations to leverage the full potential of AI technologies while maintaining data security, scalability, and performance for model training, fine tuning, and the latest agentic AI and reasoning inference requirements.”

Pure Storage says it expects to start shipping FlashBlade//EXA this summer.

The post FlashBlade//EXA Moves Data at 10+ TB/sec, Pure Storage Says appeared first on BigDATAwire.