HPE Preps for the AI Era with Updated Data Fabric, Storage, and Compute Offerings

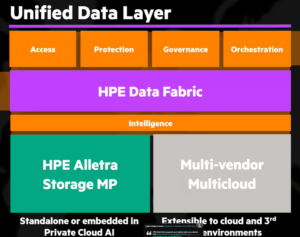

Enterprises need a whole lot of data to power their AI applications. That includes structured and unstructured data, block and file data, streaming and static data, and data in the cloud and at the edge. To help keep all that data straight, Hewlett Packard Enterprise today rolled out a reimagined version of its HPE Data Fabric offering. It also bolstered its Alletra storage appliances and expanded its GPU compute offering, dubbed Nvidia AI Computing by HPE.

HPE debuted its first data lakehouse solution back in 2021 when it launched the Ezmeral Data Fabric Object Store as part of its GreenLake Cloud Service. The goal of the software was to provide a standardized way to ingest, store, and serve the large variety of data types that enterprises wanted to use for analytics.

While analytics is still important, what's really moving the needle in big data today is AI, including agentic AI. To that end, HPE today made a series of announcements around its "new unified data layer," with the stated goal of helping customers deliver on their AI strategies. Specifically, HPE announced that its data fabric now works with the Alletra Storage MP X10000 appliance.

HPE has had the data fabric product in the market for a number of years and has a lot of happy data lakehouse customers, said Jim O’Dorisio, the SVP and GM of HPE Storage, in a press briefing last week.

"It's been very effective at that," he continued. "But increasingly, we've built out the global namespace capability of the product that helps us deal with this distributed data challenge from edge to cloud. And it's not only distributed, it's heterogeneous. So this ability to provide a single namespace from edge to cloud across a heterogeneous set of data sources to deliver universal access, multi-protocol support, automated tiering and security is absolutely foundational to enabling data."

As part of the integration of the HPE Data Fabric software with its Alletra Storage MP X10000 appliance, HPE is delivering a new, automated, inline metadata tagging engine that it says is designed to create AI-ready object data.

“This is a really cool capability,” O’Dorisio said. “It allows customers to literally chat with their data almost immediately upon ingestion. And this is very unique because we’re actually enriching the data as we’re ingesting the data.”

HPE also provided an update on the plan it announced in November to work with Nvidia to add support for Remote Direct Memory Access (RDMA) between the X10000 and Nvidia GPUs.

“We have completed that functionality,” O’Dorisio said. “It will be rolled out in this coming release, and this offers up to 6x performance improvement from both a bandwidth and a latency perspective via RDMA interfaces.”

HPE launched the Alletra Storage MP series, you will remember, two years ago to provide expandable storage appliances as part of the GreenLake cloud service. HPE tapped its partner VAST Data to provide the file storage capability to go along with the Alletra appliance's existing support for block storage.

HPE also made several improvements to Alletra Storage MP B10000, the block storage arrays that it sells as part of the GreenLake Cloud. For starters, HPE added file access to the MP B10000 arrays to go along with the block capability. That led HPE to claim that it’s the first and only vendor “to support disaggregated scale-out unified block and file on a single operating system (OS) and storage architecture.”

HPE also delivered a new service dubbed HPE Alletra Block Storage for Azure, which adds block storage capabilities for hybrid cloud environments on Microsoft Azure. It also added support for ransomware protection via Zerto, which HPE acquired in 2021.

Separately, HPE announced enhancements to HPE Private Cloud AI. For starters, it’s bolstering its GPU compute offering, dubbed Nvidia AI Computing by HPE, which the company launched last year and which it says encompasses “a full-stack, turnkey private cloud for AI.” This environment will get the latest Nvidia GPUs, including Blackwell and Grace Blackwell chips, and Nvidia AI software, the company says.

“We are maniacally focused on time to market with the latest Nvidia innovation,” Fidelma Russo, HPE CTO, executive vice president, and GM of Hybrid Cloud, said during a press briefing last week. “The real function of this is to make sure that we can deliver the technology that is fueled by AI to our customers…and show them how to get economic value out of those technologies.”

HPE also bolstered its HPE Private Cloud AI offering with several other updates, including support for the aforementioned HPE Data Fabric. It also gains a new developer system that includes “an integrated control node, end-to-end AI software and 32TB of integrated storage.”

Supporting AI workloads on the edge is a must for HPE, specifically when it comes to AI inferencing workloads, CTO Russo said on the press call. “As we all know, we are in the age of GenAI,” Russo said. “We’ve started with training, and we’ve seen…tremendous acceleration of training across the board over the last couple of years. We’re now starting to approach the place where inferencing is going to become a bigger part of the story, and especially inferencing at the edge.”

HPE Private Cloud AI also gets support for Nvidia "blueprints," which give customers access to a library of pre-built AI software, specifically the Multimodal PDF Data Extraction Blueprint and Digital Twins blueprints. Finally, HPE announced that it is improving the observability capabilities of HPE OpsRamp by adding support for GPU optimization via HPE Private Cloud AI.

HPE made these announcements as Nvidia’s GPU Technology Conference (GTC) 2025 gets underway in San Jose, California.


The post HPE Preps for the AI Era with Updated Data Fabric, Storage, and Compute Offerings appeared first on BigDATAwire.