Nvidia Preps for 100x Surge in Inference Workloads, Thanks to Reasoning AI Agents

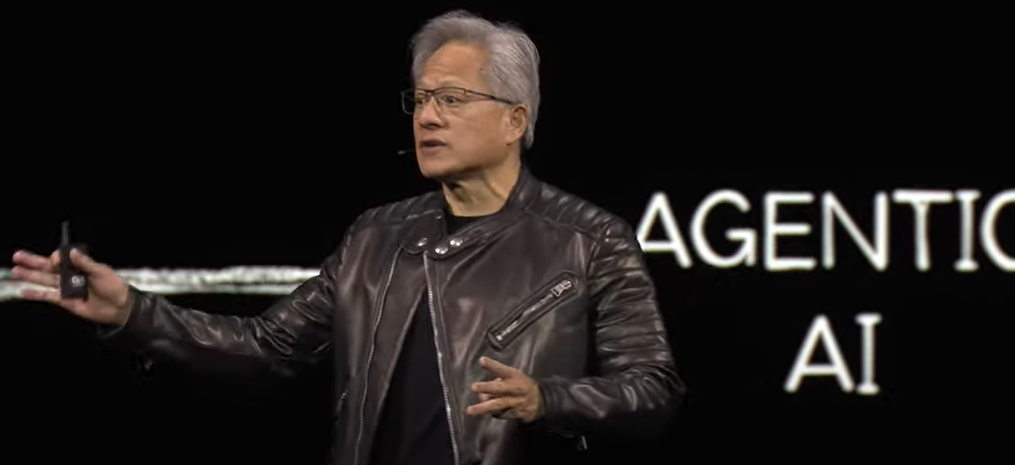

The emergence of agentic AI powered by reasoning models will have a transformative effect on the computer industry, not just on how we write and run software but on how we build entire data centers, Nvidia CEO Jensen Huang said during his keynote address at the GTC 2025 conference yesterday.

The end of 2024 and the beginning of 2025 brought two interrelated AI trends: the rise of agentic AI and the emergence of reasoning models. Together, the two technologies have the potential to upend how entire industries automate their processes.

Agentic AI refers to semi- or fully autonomous AI applications, or agents, that make decisions and take actions on behalf of humans. Reasoning models such as DeepSeek-R1, meanwhile, demonstrate the power of model distillation (training a smaller model on the outputs of a larger one) and of the mixture-of-experts (MoE) approach, in which only a subset of a model's specialized sub-networks is activated for each input.
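For readers new to mixture of experts, here is a minimal Python sketch of top-k routing. It is a toy under loudly stated assumptions: the input is a single number, the gate weights and the four "experts" are hypothetical stand-ins chosen for illustration, and nothing here reflects DeepSeek's actual architecture. In production MoE language models, experts are typically feed-forward sub-networks inside transformer layers and the gate is learned.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, gate_weights, experts, k=2):
    """Route a scalar input to the top-k experts and blend their outputs.

    Toy assumptions: scalar input, one fixed gate weight per expert,
    and experts that are plain functions rather than neural sub-networks.
    """
    # Gate score per expert (a learned layer in a real model).
    scores = [w * x for w in gate_weights]
    # Sparse activation: keep only the k highest-scoring experts.
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Renormalize the gate over the chosen experts only.
    mix = softmax([scores[i] for i in chosen])
    # Only the chosen experts are evaluated; the others are skipped entirely.
    output = sum(m * experts[i](x) for m, i in zip(mix, chosen))
    return output, chosen

# Hypothetical experts and gate weights, for illustration only.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
gate_weights = [0.1, 0.5, 0.9, -0.3]

output, chosen = moe_forward(3.0, gate_weights, experts, k=2)
print(chosen, output)
```

With `k=2`, only two of the four experts run for this input, which is the property that lets MoE models grow their total parameter count without a proportional increase in per-token compute.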

Companies across industries are scrambling to build and deploy AI agents that use reasoning models to automate tasks. Nvidia and other AI vendors are moving quickly to support this emerging use case, which represents the second generation of generative AI; chatbots and copilots made up the first.

Software engineers will be among the first professionals affected by AI agents, Huang said in his GTC 2025 keynote address on March 18 at the SAP Center in San Jose. “I’m certain that 100% of the software engineers will be AI assisted by the end of this year, and so agents will be everywhere,” he said. “So we need a new line of computers.”

If the emergence of GenAI in late 2022 supercharged demand for Nvidia’s high-end GPUs for training AI models and made Nvidia the most valuable company in the world, then the emergence of agentic AI as an inference workload could send demand for GPUs through the roof.

“The amount of computation we have to do for inference is dramatically higher than it used to be,” Huang said. “The amount of computation we have to do is 100 times more, easily.”

Huang shared Nvidia’s GPU roadmap for the next few years. Its Blackwell chips are now shipping in volume, and the company plans to ship a Blackwell Ultra chip in the second half of 2025. That will be followed in the second half of 2026 by the next-generation GPU, Rubin, which will be paired with a Vera CPU to create a Vera Rubin superchip (much like the Grace Blackwell superchip). In the second half of 2027, Nvidia plans to ship Vera Rubin Ultra.

But Vera Rubin Ultra is only the beginning of the story. Huang wants to completely reinvent not only how computers are built to support this emerging workload but also how entire data centers are architected. That’s because agentic AI is going to change the very nature of how we interface with computers and write code.

“Whereas in the past we wrote the software and we ran it on computers, in the future, the computers are going to generate the tokens for the software,” Huang said. “And so the computer has become a generator of tokens, not a retrieval of files. [It’s gone] from retrieval-based computing to generative-based computing.”
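The contrast Huang draws between retrieval and generation can be made concrete with a toy Python sketch. The file name, its contents, and the hard-coded next-token table are all hypothetical; the table stands in for a trained model, which would instead predict a probability distribution over a large vocabulary and condition on the entire preceding sequence rather than just the last token. The point is structural: retrieval returns stored content in one lookup, while generation runs one model step per token.

```python
# Retrieval-based computing: the result already exists and is looked up.
STORED_FILES = {"reply.txt": "the tokens are generated"}  # hypothetical file

def retrieve(name):
    return STORED_FILES[name]

# Generative computing: the result is produced one token per step.
# Assumption: a hard-coded next-token table stands in for a trained model,
# and it looks only at the last token (real models see the whole prefix).
NEXT_TOKEN = {
    "<start>": "the",
    "the": "tokens",
    "tokens": "are",
    "are": "generated",
    "generated": "<end>",
}

def generate(max_steps=50):
    """Autoregressive loop: each step predicts the next token."""
    tokens, current, steps = [], "<start>", 0
    while steps < max_steps:
        current = NEXT_TOKEN[current]  # one "model call" per token
        steps += 1
        if current == "<end>":
            break
        tokens.append(current)
    return " ".join(tokens), steps

text, steps = generate()
print(retrieve("reply.txt"))  # a single lookup
print(text, f"({steps} model steps)")
```

Because every generated token costs a model step, longer outputs mean proportionally more inference compute, which is the dynamic behind Huang's prediction.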

The old way of building data centers is going to change, Huang said. Instead of data centers, we’ll have AI factories that generate value using AI.

“It has one job and one job only: Generating these incredible tokens that we then reconstitute into music, into words, into videos, into research, into chemicals and proteins,” Huang said. “So the world is going through a transition in not just the amount of data centers that will be built, but also how it is built. Everything in the data center will be accelerated.”

Nvidia is doing its best to shrink GPU-accelerated systems and make them more efficient. It has introduced water-cooled systems, which allow the hardware to be packed more densely. It’s also moving to optical networking, as Huang showed with the Spectrum-X and Quantum-X photonics equipment unveiled yesterday, which should improve power efficiency in the data center.

The currency of GenAI is the token. AI models turn words into tokens, process the tokens, then turn the tokens back into words (or pictures). The first generation of GenAI products, such as ChatGPT, took their best guess at answering a question in a one-shot manner, and as a result they were often wrong. The new generation of reasoning models that will power agentic AI works through a series of intermediate steps as part of the reasoning process, and each of those steps consumes additional tokens.
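To illustrate the words-to-tokens round trip, here is a minimal Python sketch of a word-level tokenizer. Splitting on whitespace is a simplifying assumption chosen for readability: production models use subword tokenizers such as byte-pair encoding, so a single word may map to several tokens. The helper names (`build_vocab`, `encode`, `decode`) are hypothetical.

```python
def build_vocab(corpus):
    """Assign an integer ID to each distinct word, in order of first appearance."""
    vocab = {}
    for word in corpus.split():
        vocab.setdefault(word, len(vocab))
    return vocab

def encode(text, vocab):
    """Words -> token IDs (what the model actually processes)."""
    return [vocab[word] for word in text.split()]

def decode(ids, vocab):
    """Token IDs -> words (turning the model's output back into text)."""
    id_to_word = {i: word for word, i in vocab.items()}
    return " ".join(id_to_word[i] for i in ids)

vocab = build_vocab("the bride sits next to the groom")
ids = encode("the groom sits next to the bride", vocab)
print(ids)  # [0, 5, 2, 3, 4, 0, 1]
print(decode(ids, vocab))
```

A reasoning model emits its intermediate steps as tokens too, so every extra step it writes out adds to the count that has to be generated and paid for.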

During his keynote, Huang demonstrated the difference in response quality and compute requirements by posing a question about seating at a wedding party. The bride and groom had specific requirements about who had to sit next to whom and about the best angles. ChatGPT consumed 439 tokens generating its answer and got it wrong. A reasoning model consumed 8,290 tokens and got the correct answer.

“So the one shot is 439 tokens. It was fast. It was effective, but it was wrong,” Huang said. The reasoning model, on the other hand, “took a lot more computation because the model’s more complex.” And it got the answer correct.
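The token counts from the wedding-seating demo make the gap easy to quantify. A back-of-the-envelope calculation in Python, using only the two figures reported above:

```python
# Token counts reported for the wedding-seating demo.
one_shot_tokens = 439
reasoning_tokens = 8_290

ratio = reasoning_tokens / one_shot_tokens
print(f"The reasoning model used {ratio:.1f}x as many tokens")  # 18.9x
```

Token count is only one factor: total compute also scales with the cost of generating each token, which depends on the size of the model, and the demo did not break that out.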

As agentic AI makes its way into corporations and data centers, it will require different hardware and different software. Software will be generated by computers instead of written by hand. According to Huang, reasoning models will require 100x more compute than first-generation GenAI did. Customers will need to balance tradeoffs among accuracy, latency, and power consumption in ways they haven’t had to before.

Judging by his keynote, Huang is looking forward to this massive shift, one that his company played an outsize role in instigating. The world leader in accelerated computing is pressing hard on the accelerator pedal, driving massive change at an ever-faster pace.

“We’ve known for some time that general-purpose computing has run out, of course, run its course, and that we need a new computing approach,” Huang said. “And the world is going through a platform shift from hand-coded software running on general purpose computers to machine learning software running on accelerators and GPUs. This way of doing computation is at this point, past this tipping point, and we are now seeing the inflection point happening, inflection happening with the world’s data center buildout.”


The post Nvidia Preps for 100x Surge in Inference Workloads, Thanks to Reasoning AI Agents appeared first on BigDATAwire.